Quote:

Originally Posted by JoelHruska

What would you call an approach like ESRGAN? According to the description of the model:

"how do we recover the finer texture details when we super-resolve at large upscaling factors? ... Our deep residual network is able to recover photo-realistic textures from heavily downsampled images on public benchmarks."

They use phrases like "recover" as opposed to "approximate" or "repaint." Are they obfuscating their own approach, or are they one of the models that actually recovers data?

|

Hey Joel,

sorry for the long delay, "life to do"

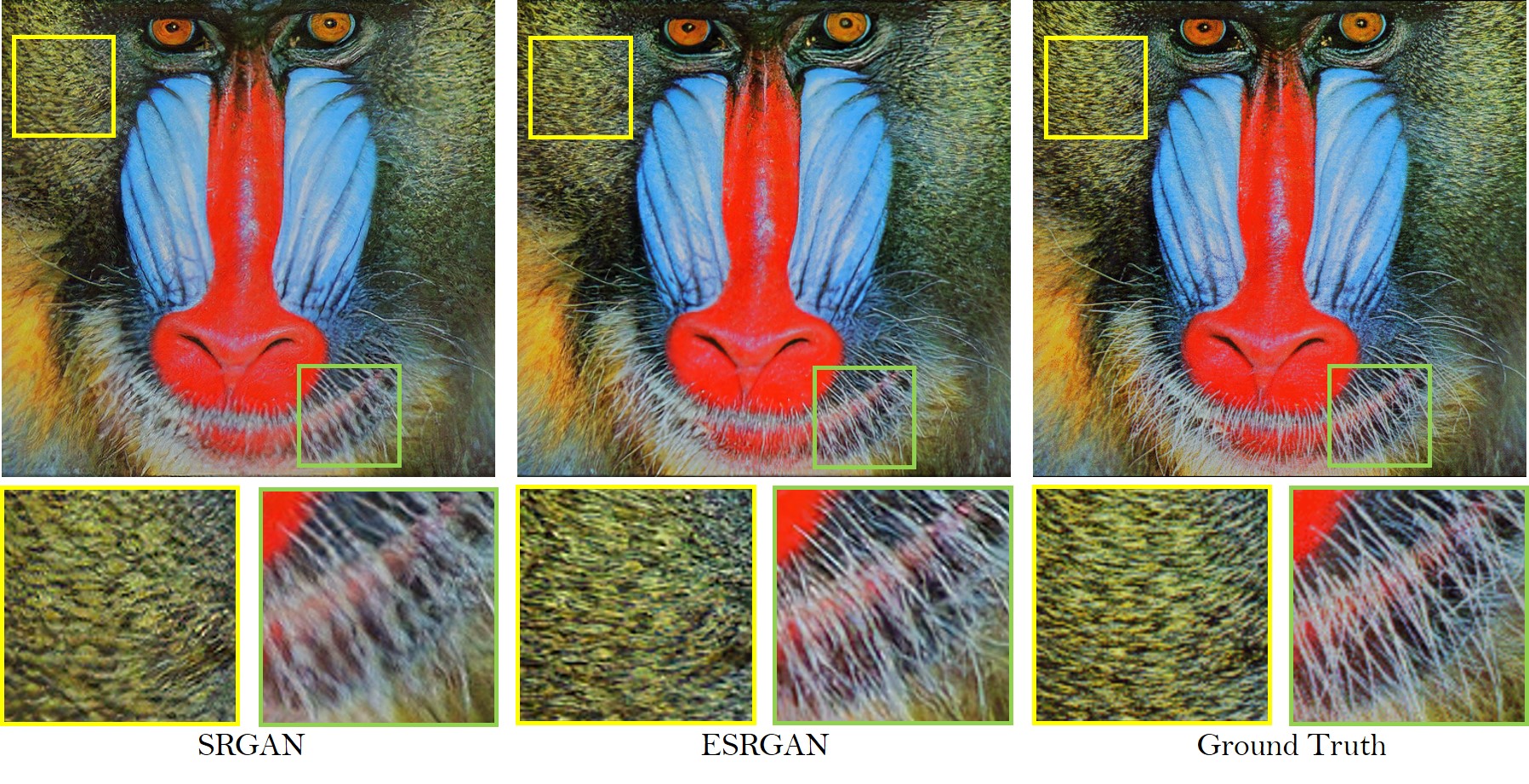

ESRGAN actually "makes stuff" up. It uses models which are trained on a certain type of content and tries to recognize what it sees and then repaints what it thinks it sees. Itīs pretty obvious if we take a look at some of the authorīs example pictures:

If you compare "HR" (high resolution = the original) or "ground truth" to the ESRGAN Example and look at the fine details, itīs eays to see, that the bamboo's beard looks good - but the hair are not the same ones as in the original. Also the grass looks rather detailed and good, but the single straws are not all the ones in the Original.

ESRGAN also only uses single frames, so itīs not aware of the prevoius and following frames in a video.

Therefore, it works quite good on single images IF your model is trained for the kind of content you want to upscale. There are quite a few trained models out there, named "faces", "Wood", "maps", etc... some are for realistic fotografs, others for special content, many for old 8 Bit Games etc...

So when they claim to "recover", what they actually are saying is: "we recognize hair, so we put hair there"... To be fair, itīs just teh terminology used in this field.. if one looks up the research papers, the distinction of which mechanism does what is not hung up on terms like "recover"

Although some outputs are very impressive, the limits of this apporach is obvious as soon as you have such low resolution that the guesswork at hand simply is making up false details. A very small shot of an actor shot from the side simply isnīt enough detail to pick up. Also, the model should be trained for the specific content.. Which most arenīt.. VEAI uses generic models, not "Scifi from the 90s" trained ones...

My proposal at Topaz was to train models for specific usecases - There is "DS9 - like looking" footage ou there which exists in HD, so a training set could be compiled and trained upon... In the case if ESRGAN, we could do it ourselfs, there are many tutorials out there how to set up training.

Of course, there are other "AI-Upscalers/denoisers/etc..." out there, in fact, the list ist enormous (the following list is just "a few" examples):

https://awesomeopensource.com/projects/super-resolution

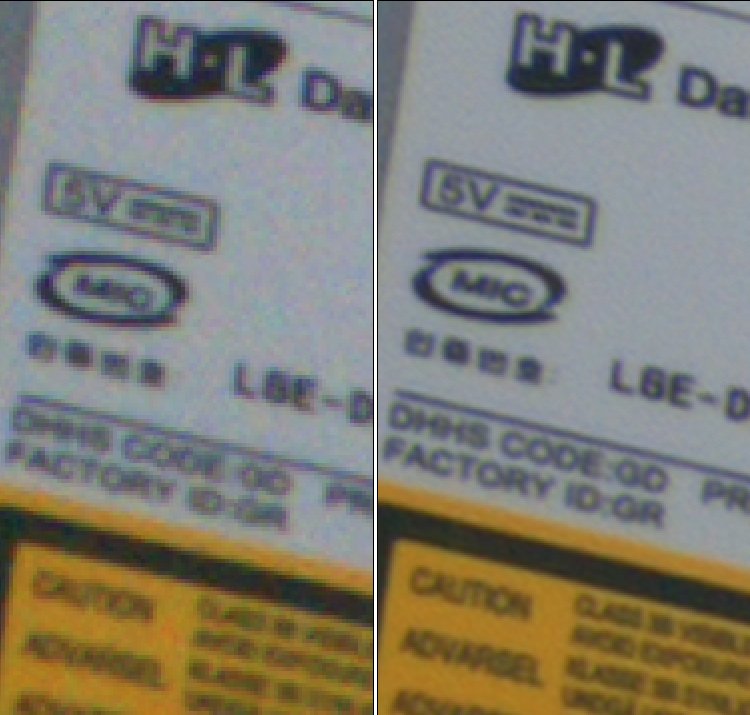

With video, we have another source of detail, we can "tap into"... The previous and following frames often contain the same objects as the current one. Often so called "super resolution" methods can take many frames into account and mostly by subsampling are able to recover details. One good illustration of the basic principal in my oppinion is the somewhat older software photoaccute, which works on multiple shots from the same scene in order to process Fotos. This screenshot frmo the manufacturers website illustrates what can be done if several pictures are combined:

With video, itīs a little more complicated, but research as gone a long way, so there are algos which are able to recover some lost details in video - the screenshots in the above post with the NNEEDI comparison are a good example. The red marked line on the spaceship is a recovered detail which only appears in the AI-Version of the upscale.

One example out of my tests (SG1, taken from NTSC DVD):

Some of the numbers are revealed, some look a little more "generic", maybe I get some more detail out of this (challenge accepted... hehe)... The workflow chain for this is... ugly... but VEAI is involved at some stage to do the upscaling (Artemis LQ in this example, a version from a few weeks ago...).

One IMO powerfull technique which is able to do this is SPMC:

https://eng.uber.com/research/detail...er-resolution/

A good example (taken from the author's paper):

There is a lot going on in this field - personaly I am always torn between "finish it up" and "oh, ah better method arose, letīs start from scratch all over again"

I hope my long writing made some sense and helped a little..