Welcome to Doom9's Forum, THE in-place to be for everyone interested in DVD conversion. Before you start posting please read the forum rules. By posting to this forum you agree to abide by the rules.

#25341

Registered User

Join Date: Jan 2008

Posts: 589

One thing to note is that to be able to see one lone frame drop (my current issue), you need reasonably accurate video playback in the first place (e.g. using smooth motion or a matching refresh rate). If your video playback is not smooth to begin with (e.g. "raw" 24p@60Hz), then it is unlikely you will notice a frame drop, because it will be buried in the overall judder.
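To see why a single dropped frame hides in "raw" 24p@60Hz playback, it helps to count how many refreshes each film frame stays on screen. This is a simplified timing model, assuming each frame appears at the first vsync at or after its nominal timestamp and that no smooth motion or frame blending is active; `refresh_counts` and `drop_frame` are hypothetical helpers, not part of madVR.

```python
import math
from fractions import Fraction


def refresh_counts(fps, hz, frames):
    """How many display refreshes each source frame stays on screen, assuming
    a frame appears at the first vsync at or after its nominal timestamp."""
    ratio = Fraction(hz) / Fraction(fps)  # refreshes per source frame
    return [math.ceil((i + 1) * ratio) - math.ceil(i * ratio) for i in range(frames)]


def drop_frame(counts, index):
    """Simulate dropping frame `index`: the previous frame simply stays on
    screen for the dropped frame's refreshes as well (simplified model)."""
    if not 0 < index < len(counts):
        raise ValueError("index must point at a frame after the first one")
    merged = counts[index - 1] + counts[index]
    return counts[:index - 1] + [merged] + counts[index + 1:]


print(refresh_counts(24, 24, 6))                 # [1, 1, 1, 1, 1, 1]
print(drop_frame(refresh_counts(24, 24, 6), 3))  # [1, 1, 2, 1, 1]
print(refresh_counts(24, 60, 6))                 # [3, 2, 3, 2, 3, 2]
print(drop_frame(refresh_counts(24, 60, 6), 3))  # [3, 2, 5, 3, 2]
```

At 24 Hz the drop is the only irregularity in an otherwise flat sequence. At 60 Hz the frame durations already alternate between 50 ms (3 refreshes) and 33.3 ms (2 refreshes), so the hiccup is one more irregularity on top of constant judder. This models timing only, not perception.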

#25342

Registered User

Join Date: Feb 2014

Posts: 162

Besides nearest neighbor, which is the lowest-quality setting, I cannot tell any difference between the other settings, from bilinear to NNEDI3. To make things worse, I can't even tell nearest neighbor from NNEDI3 in chroma upscaling, lol! How...? Do you really need a projector to see the difference?
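Part of the answer may be that in typical 4:2:0 video the chroma planes have half the luma resolution both horizontally and vertically, and most fine detail lives in luma, so the chroma upscaler mainly changes how color edges are reconstructed. A minimal 1-D sketch of two of the options, assuming center-aligned sample positions and edge clamping (real content often uses a different chroma siting, and this is not madVR's implementation):

```python
def upsample_nearest(row):
    """2x nearest neighbor: each chroma sample is simply duplicated."""
    out = []
    for v in row:
        out.extend([v, v])
    return out


def upsample_bilinear(row):
    """2x linear interpolation, assuming center-aligned sample positions
    and clamping at the edges."""
    n = len(row)
    out = []
    for j in range(2 * n):
        pos = (j + 0.5) / 2 - 0.5          # output sample position in source coordinates
        pos = min(max(pos, 0.0), n - 1.0)  # clamp to the valid source range
        left = int(pos)
        right = min(left + 1, n - 1)
        frac = pos - left
        out.append(row[left] * (1 - frac) + row[right] * frac)
    return out


print(upsample_nearest([1, 3]))   # [1, 1, 3, 3]
print(upsample_bilinear([1, 3]))  # [1.0, 1.5, 2.5, 3.0]
```

On flat or slowly varying color both give nearly the same result; they only diverge at sharp color transitions (saturated edges, colored text), which may explain why the difference is hard to spot in ordinary footage.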

#25343

QB the Slayer

Join Date: Feb 2011

Location: Toronto

Posts: 697

One thing I have noticed about trying to tell the differences between algorithms: watching stills, frozen frames or the same scene over and over doesn't seem to help. But I DO notice the differences if I watch for extended periods of time. It's more about NOT noticing things.

QB

#25344

Registered User

Join Date: Aug 2007

Location: Fort Wayne, Indiana

Posts: 52

I had a Darbee hooked up for a short time before NNEDI3 32 neurons, and it was OK with a Panasonic 7000. For me it is not needed with my current DLP projector and the latest madVR. I tried to do a smaller image, not red on the projector, just trying to post an image on sharpness with madVR; I removed it for not posting correctly.

Last edited by GREG1292; 27th March 2014 at 01:38.

#25346

Registered User

Join Date: Feb 2008

Posts: 45

Quote:

http://www.avsforum.com/t/1477339/450#post_24514253

Here is a summary:

- PCI Express version (1.1 vs 2.0 vs 3.0) is the greatest factor affecting OpenCL <-> D3D9 interoperation on AMD GPUs. madshi speculates that AMD internally does the interop using some sort of copyback between CPU RAM and GPU RAM.
- Haswell PCIe 3.0 is the fastest, then IVB PCIe 3.0 (3.0 is supported only by Core i5 and higher), then AMD (Kaveri) in the benchmark. However, the difference between them is smaller in real-world video playback (measured by rendering time).
- The lower the PCIe link speed, the higher the GPU utilization (measured with HWiNFO64). I am not sure how to interpret it.
- If you insert a device in the second or third PCIe 3.0 x16 slot of a Z87 chipset motherboard, the graphics card works only at PCIe 3.0 x8 or even x4, which has the same bandwidth as PCIe 2.0 x16 or PCIe 1.1 x16 respectively. So be careful. On H87 / B85 chipset motherboards there is no such problem, because the second PCIe x16 slot (if it exists) is connected to the chipset (and works at 2.0 x4).

BTW, using Bilinear with NNEDI3 is meant to fill the gap between Jinc3 and N16 x BC75AR, and the gap between N16 x BC75AR and N32 x BC75AR. Sometimes the GPU may not be powerful enough for N16 x BC75AR, but enough for N16 x Bilinear. PQ is still better than Jinc3 in most cases. Lanczos is very close to Bicubic in quality and I don't think it's worth its own level. Maybe level 2a = Bicubic, level 2b = Lanczos. There is a huge gap in both quality and speed between levels 1, 2, 3, 4 and 5, while the difference in quality between a and b is very small (that's why I chose "a" and "b"; "c" could be Jinc, but the difference between b and c is very small and NNEDI3 x c is too slow for most cases).

Last edited by renethx; 27th March 2014 at 13:04.
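The bandwidth equivalences in the Z87 bullet can be checked from the per-lane signalling rate and line encoding of each PCIe generation. A rough sketch that counts only encoding overhead (packet and protocol overhead reduce real throughput further), and that says nothing about the interop copyback madshi speculates about:

```python
# Per-lane raw rate (GT/s) and line-code efficiency for each PCIe generation.
PCIE_GENERATIONS = {
    "1.1": (2.5, 8 / 10),     # 8b/10b encoding
    "2.0": (5.0, 8 / 10),     # 8b/10b encoding
    "3.0": (8.0, 128 / 130),  # 128b/130b encoding
}


def link_bandwidth_gbps(generation, lanes):
    """Approximate one-direction bandwidth in GB/s after line encoding only."""
    rate, efficiency = PCIE_GENERATIONS[generation]
    return rate * efficiency * lanes / 8  # Gbit/s -> GB/s


for gen, lanes in (("3.0", 16), ("3.0", 8), ("2.0", 16), ("3.0", 4), ("1.1", 16)):
    print(f"PCIe {gen} x{lanes}: {link_bandwidth_gbps(gen, lanes):.2f} GB/s")
```

PCIe 3.0 x8 comes out at roughly 7.9 GB/s against 8.0 GB/s for 2.0 x16, and 3.0 x4 at roughly 3.9 GB/s against 4.0 GB/s for 1.1 x16, which matches the equivalence stated above.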

#25349

Registered User

Join Date: May 2012

Posts: 447

Edit: Hah! It seems Kalanoch had the same idea - I guess I should have refreshed.

Quote:

__________________

Test patterns: Grayscale yuv444p16le perceptually spaced gradient v2.1 (8-bit version), Multicolor yuv444p16le perceptually spaced gradient v2.1 (8-bit version)

#25350

( ≖‿≖)

Join Date: Jul 2011

Location: BW, Germany

Posts: 380

BT.2020 support

How does madVR's support for BT.2020 work? Does madVR currently not support constant-luminance encoding at all? If so, how and when will it do so in the future?

How does color management work in the presence of BT.2020? How is BT.2020 content displayed on BT.709 monitors and vice versa? Do you intend to rely on the .3dlut being created against BT.2020? How will you transform into this space? Does madVR detect and respect the --primaries tag? What does it do in the absence of a .3dlut? Do you do so with constant luminance? Which gamma transfer function do you use to decode/encode BT.2020 and BT.709? How will it work moving forward to a constant-luminance environment? How do you plan on dealing with rounding/clipping artifacts on BT.709 and BT.2020 source 3dluts, especially for wide-gamut profiles? When will madVR support .ICC profiles, and when will it support changing .ICC profiles at runtime (e.g. if you move between monitors)?
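For the BT.2020 on BT.709 part of these questions, one common approach (not necessarily madVR's) is to convert linear-light RGB between the two sets of primaries with a 3x3 matrix derived from their chromaticities and the shared D65 white point. The sketch below derives that matrix; it assumes the signal has already been decoded to linear light (non-constant-luminance RGB) and performs no gamut mapping. The helper names are illustrative only.

```python
# CIE 1931 xy chromaticities of the primaries (ITU-R BT.709 / BT.2020) and D65 white.
BT709 = {"r": (0.640, 0.330), "g": (0.300, 0.600), "b": (0.150, 0.060)}
BT2020 = {"r": (0.708, 0.292), "g": (0.170, 0.797), "b": (0.131, 0.046)}
D65 = (0.3127, 0.3290)


def xy_to_xyz(x, y):
    """XYZ of a chromaticity at luminance Y = 1."""
    return [x / y, 1.0, (1.0 - x - y) / y]


def inverse3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[v / det for v in row] for row in adj]


def matmul(a, b):
    return [[sum(a[r][k] * b[k][c] for k in range(3)) for c in range(3)] for r in range(3)]


def mat_vec(m, v):
    return [sum(m[r][k] * v[k] for k in range(3)) for r in range(3)]


def rgb_to_xyz_matrix(primaries, white):
    """Linear RGB -> XYZ: columns are the primaries' XYZ, scaled so that
    RGB (1, 1, 1) lands exactly on the white point."""
    cols = [xy_to_xyz(*primaries[p]) for p in ("r", "g", "b")]
    m = [[cols[c][r] for c in range(3)] for r in range(3)]
    scale = mat_vec(inverse3(m), xy_to_xyz(*white))
    return [[m[r][c] * scale[c] for c in range(3)] for r in range(3)]


def bt2020_to_bt709_matrix():
    """Linear BT.2020 RGB -> linear BT.709 RGB (same D65 white, no gamut mapping)."""
    to_xyz = rgb_to_xyz_matrix(BT2020, D65)
    from_xyz = inverse3(rgb_to_xyz_matrix(BT709, D65))
    return matmul(from_xyz, to_xyz)


m = bt2020_to_bt709_matrix()
print(mat_vec(m, [1.0, 1.0, 1.0]))  # white stays (approximately) white
print(mat_vec(m, [0.0, 1.0, 0.0]))  # pure BT.2020 green: negative R and B
```

A fully saturated BT.2020 green comes out with negative red and blue components (roughly -0.59 and -0.10), meaning the color lies outside the BT.709 gamut; whether such values are hard-clipped or softly gamut-mapped is exactly the policy question asked above.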

__________________

Forget about my old .3dlut stuff, just use mpv if you want accurate color management

#25351

Kid for Today

Join Date: Aug 2004

Posts: 3,477

So I'm trying to put my evil GPU fan speed plan into action using BarelyClocked.exe, but it doesn't work atm. Any idea, please?

The 30% is only there so I can easily notice the fan speed increase, but ideally I want mVR to set the fan speed to 20% while a movie is running and put it back to 18% (inaudible) once my media player is closed. The command lines work fine on their own, and the profile appears to be properly loaded.

Quote:

I would also appreciate a way to disable mVR's crash reporter, as PotP appears to make it crash randomly when using its seamless playback feature.

Last edited by leeperry; 27th March 2014 at 18:31.

#25352

Registered User

Join Date: Feb 2014

Posts: 162

Speaking of color management and monitor calibration, there was a very active discussion on gamma a week ago, then it suddenly died. What was the conclusion? Is 2.2 still the best gamma to use when calibrating monitors, rather than sRGB or L*?

#25353

Registered User

Join Date: Oct 2012

Posts: 7,926

Quote:

So you don't need a 3D LUT; a 3D LUT is just more accurate than a normal calibration with an ICC file.

#25354

( ≖‿≖)

Join Date: Jul 2011

Location: BW, Germany

Posts: 380

Okay, good to know that the monitor gamut is customizable. I don't understand this line, though: what do you mean by “normal calibration with a icc file”? I thought madVR *only* supports calibration via 3dlut, or has that changed? Won't it generate a 3dlut from the .icc either way? Doing the full ICC calculation at runtime on the GPU seems like an odd thing to do; or does it only extract the primaries/transfer characteristics and implement those, ignoring detailed stuff like the LUT tables in the profile?

__________________

Forget about my old .3dlut stuff, just use mpv if you want accurate color management

#25355

Registered User

Join Date: Jan 2008

Posts: 589

Quote:

For comparison, BT.1886 is equivalent to a pure 2.4 power law on a perfect screen with infinitely deep black levels, and is roughly similar to sRGB gamma on typical IPS screens that have a 1000:1 contrast ratio. Besides, what the hell is "L* gamma"?

Quote:

madVR does not support ICC, and as far as I know madshi has no plans to implement it. You're stuck with 3DLUTs, which are much less convenient to use. I have no idea how madVR does gamut mapping when you feed it BT.2020 content and ask it to convert it to BT.709. That being said, by generating a 3DLUT using a CMS such as Argyll you can get complete control over the gamut mapping, since it becomes the responsibility of the 3DLUT, not of madVR.

Last edited by e-t172; 27th March 2014 at 17:14.
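To illustrate why handing the gamut mapping to the 3DLUT gives complete control: the CMS precomputes the whole calibration, including any gamut mapping, into a grid of output colors, and the renderer only interpolates between grid nodes. A generic sketch using trilinear interpolation, assuming RGB values in 0..1 and an in-memory nested-list LUT; it does not read madVR's .3dlut file format, and real renderers may use other grid sizes or tetrahedral interpolation. `identity_lut` and `apply_lut` are hypothetical names.

```python
def identity_lut(n):
    """n x n x n grid (indexed lut[r][g][b]) mapping each node to its own RGB."""
    step = 1.0 / (n - 1)
    return [[[(r * step, g * step, b * step) for b in range(n)]
             for g in range(n)] for r in range(n)]


def apply_lut(lut, rgb):
    """Look up an RGB triple (0..1) in a 3D LUT using trilinear interpolation."""
    n = len(lut)
    idx, frac = [], []
    for c in rgb:
        pos = min(max(c, 0.0), 1.0) * (n - 1)  # clamp, then scale to grid coordinates
        i = min(int(pos), n - 2)               # lower corner of the enclosing cell
        idx.append(i)
        frac.append(pos - i)
    (r0, g0, b0), (fr, fg, fb) = idx, frac
    out = [0.0, 0.0, 0.0]
    # Blend the 8 corners of the cell, weighted by distance along r, g and b.
    for dr in (0, 1):
        for dg in (0, 1):
            for db in (0, 1):
                w = ((fr if dr else 1 - fr) * (fg if dg else 1 - fg)
                     * (fb if db else 1 - fb))
                node = lut[r0 + dr][g0 + dg][b0 + db]
                for k in range(3):
                    out[k] += w * node[k]
    return out


print(apply_lut(identity_lut(17), [0.2, 0.5, 0.9]))  # approximately [0.2, 0.5, 0.9]
```

With an identity LUT the output equals the input; a LUT generated by a CMS would instead store the corrected color at each node, so every decision about out-of-gamut colors is baked into the table before playback starts.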

#25357

Registered User

Join Date: Feb 2014

Posts: 162

Quote:

#25358

Registered User

Join Date: Jan 2008

Posts: 589

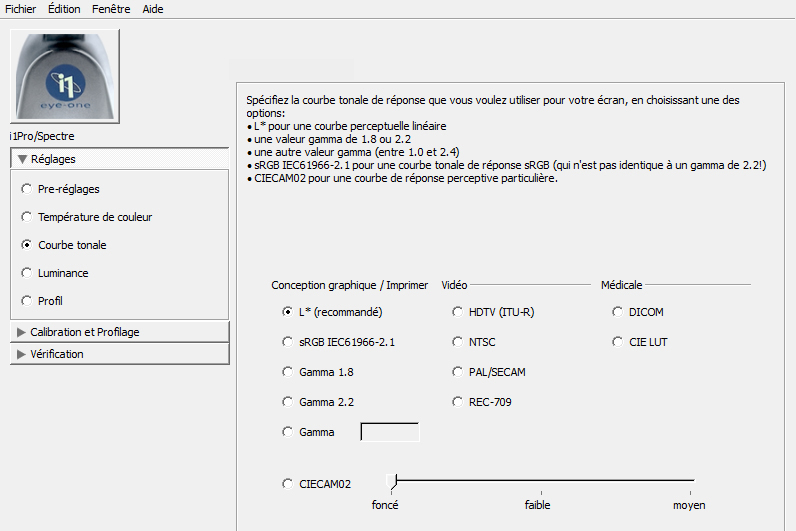

Okay, so from a quick Google search it seems L* is a weird gamma spec that has nothing to do with video (it seems more intended for printing or other editing work). Don't use it.

Your software doesn't seem to have an option for BT.1886, which is not surprising (maybe that's what "HDTV (ITU-R)" is referring to, but that's not clear from your screenshot). In that case a good approximation is to use sRGB, assuming you're using a typical IPS screen (1000:1 contrast ratio). If you want complete control over what you're doing, I would recommend using Argyll CMS to calibrate your screen and generate a 3DLUT, but that's more technically involved.
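To put numbers on the "sRGB is a good approximation of BT.1886 on a 1000:1 screen" advice, here is a sketch of the BT.1886 EOTF (including its black-level terms) next to the sRGB decoding curve. Luminance is normalized so white = 1; the black level of 0.001 is an assumption standing in for a typical 1000:1 IPS panel.

```python
def bt1886_eotf(v, white=1.0, black=0.0, gamma=2.4):
    """ITU-R BT.1886 EOTF: signal v (0..1) -> luminance, for a display with
    the given white and black luminance (any consistent unit)."""
    lw, lb = white ** (1 / gamma), black ** (1 / gamma)
    a = (lw - lb) ** gamma  # overall gain
    b = lb / (lw - lb)      # black-level lift
    return a * max(v + b, 0.0) ** gamma


def srgb_eotf(v):
    """Piecewise sRGB (IEC 61966-2-1) decoding curve, normalized to white = 1."""
    if v <= 0.04045:
        return v / 12.92
    return ((v + 0.055) / 1.055) ** 2.4


for v in (0.1, 0.25, 0.5, 0.75):
    print(f"{v:.2f}  pure 2.4: {v ** 2.4:.4f}  "
          f"BT.1886 @1000:1: {bt1886_eotf(v, 1.0, 0.001):.4f}  sRGB: {srgb_eotf(v):.4f}")
```

With black = 0 the BT.1886 curve collapses to a pure 2.4 power law; with black = 0.001 it lands close to the sRGB curve in this comparison. Real panels vary, so a measured black level is better than the value assumed here.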

#25359

Registered User

Join Date: Dec 2012

Location: Neverland, Brazil

Posts: 169

Quote:

Quote:

The only time bilinear made a noticeable quality difference for me was when it was used as the image upscaling algorithm; for chroma it shouldn't be much of a trade-off. madshi told me that the GPU cost of bilinear versus any other algorithm is like night and day, so if someone is looking to try NNEDI, it makes sense. Unfortunately I don't currently have the hardware to try all of those levels, so I appreciate your thoughts on them. Those graphs are also based on a 1080p>1440p upscale, if I'm not wrong. If I could, I'd scale the performance data, but that's probably not the best way to go about it. I'd like to know what results 720p>1080p would give.

__________________

madVR scaling algorithms chart - based on performance x quality | KCP - A (cute) quality-oriented codec pack

#25360

Registered User

Join Date: Mar 2014

Posts: 30

So I did a lot of testing today on whether or not NNEDI is really worth the trouble, and I came to the conclusion that it's not. I don't know what you guys are seeing, or believe you're seeing, but I sometimes think I'm in a homeopathy forum.

Here's a test for you: I took 3 screenshots, one with NNEDI 32 neurons (bicubic upscaling and CR downscaling), one with JAR 4 taps and one with BC75AR upscaling. Which one is NNEDI and which are the others?

Pic A

Pic B

Pic C

All pics are from an HQ 720p source.

Last edited by daglax; 27th March 2014 at 18:41.
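For a test like this to be convincing, the person guessing should not know which file is which, and a full guess among three options is right by chance one time in six. A hypothetical sketch of how the labels could be randomized and scored; the file names are made up, and this is not how daglax actually prepared the pictures.

```python
import random


def make_blind_set(files, seed=None):
    """Assign shuffled letter labels (A, B, C, ...) to screenshot files.
    Returns (letters, key): show only the letters, keep the key hidden."""
    rng = random.Random(seed)
    shuffled = list(files)
    rng.shuffle(shuffled)
    letters = [chr(ord("A") + i) for i in range(len(shuffled))]
    return letters, dict(zip(letters, shuffled))


def score(guesses, key):
    """Count how many letter -> file guesses match the hidden key."""
    return sum(1 for letter, guess in guesses.items() if key.get(letter) == guess)


files = ["nnedi3_32.png", "jinc4ar.png", "bc75ar.png"]  # hypothetical file names
letters, key = make_blind_set(files)
print(letters)  # ['A', 'B', 'C']
```

Repeating the test over several scenes and counting how often the NNEDI shot is identified separates a real visible difference from lucky guesses better than a single round.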
