
#21 | Link | |

|

Registered User

Join Date: Aug 2010

Location: Athens, Greece

Posts: 2,901

|

Quote:

You seem desperate to prove Threadripper is lame compared to Skylake-X by manipulating the usage conditions. Do you really think that the IPC advantage of a CPU has anything to do with a 10C/20T vs 16C/32T comparison? At the end of the day we are comparing final products, not just architectures in very specific situations, because those two CPUs have the same price. Skylake-X is a choice for Intel fanboys or for people who can afford to burn money in the fireplace during the winter.

__________________

Win 10 x64 (19042.572) - Core i5-2400 - Radeon RX 470 (20.10.1) HEVC decoding benchmarks H.264 DXVA Benchmarks for all |


#22 | Link | ||

|

Registered User

Join Date: May 2018

Posts: 2

|

Quote:

I have both, the 7960X and 1950X. Even if I had only the 7900X, I'd still use it for HEVC encoding rather than the 1950X due to speed and higher efficiency. Obviously in other multithreaded workloads the 1950X will annihilate the 7900X, but that's not the case with x265.


#23 | Link | |

|

Testeur de codecs

Join Date: May 2003

Location: France

Posts: 2,484

|

Quote:

- 4K HEVC source at very high bitrate (~140 fps at 100% CPU load just for stream decoding on the i9-7900X and 1950X ... and Intel is really better than AMD for that)
- Really high speed setting (40 fps and more for 4K x265 encoding ... !!!?).

HandBrake certainly spends a lot of CPU time just decoding the 4K HEVC source. I ran a more serious benchmark here, with a 4K source and x265 encoding at preset "medium": https://forum.doom9.org/showthread.php?t=174393

Code:

|----------------|---------|---------|---------|---------|---------|---------|---------|---------|---------|---------|---------|--------|
| CPU            | x264    | x265    | LAVC    | auto    | MMX2    | SSE     | SSE2    | SSE3    | SSE4    | AVX     | AVX2    | All    |
|----------------|---------|---------|---------|---------|---------|---------|---------|---------|---------|---------|---------|--------|
| 1950X          | 37.59   | 6.50    | 136     | 4.65    | 2.02    | 2.00    | 2.95    | 3.10    | 3.89    | 4.10    | 4.26    | N/A    |
| Core i9-7900X  | 29.37   | 5.47    | 145     | 4.38    | 1.35    | 1.35    | 2.14    | 2.32    | 3.49    | 3.50    | 4.30    | N/A    |

- The 1950X @ stock (16C/32T) is faster than the i9-7900X @ stock (10C/20T) for 4K x265 encoding.
- With SSE4, at the same clock speed and the same core count, Intel and AMD are on par for x265 encoding.
- AVX2 gives Intel a 15-20% speed improvement over AMD in x265 encoding (on all modern CPUs).
- AVX2 is not really effective for x264: at the same clock speed and the same core count, Intel and AMD are on par for encoding speed.
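To put the table into percentages, here is a minimal sketch that only does arithmetic on the posted numbers. It assumes every column is a throughput figure where higher is faster; the post does not state the units or exactly how the per-instruction-set columns were measured, so the variable names and the SSE4-to-AVX2 comparison are my own framing, not part of the original benchmark.

```python
# Figures copied from the table above (assumption: higher means faster).
results = {
    "1950X":         {"x264": 37.59, "x265": 6.50, "SSE4": 3.89, "AVX2": 4.26},
    "Core i9-7900X": {"x264": 29.37, "x265": 5.47, "SSE4": 3.49, "AVX2": 4.30},
}


def percent_gain(new, old):
    """Relative gain of `new` over `old`, in percent."""
    return (new / old - 1.0) * 100.0


amd = results["1950X"]
intel = results["Core i9-7900X"]

# Lead of the 1950X over the 7900X in the stock x264 and x265 columns.
x264_lead = percent_gain(amd["x264"], intel["x264"])
x265_lead = percent_gain(amd["x265"], intel["x265"])

# Gain of the AVX2 column over the SSE4 column, per CPU.
avx2_gain = {cpu: percent_gain(r["AVX2"], r["SSE4"]) for cpu, r in results.items()}

print(f"1950X lead in x264: {x264_lead:.1f}%")
print(f"1950X lead in x265: {x265_lead:.1f}%")
for cpu, gain in avx2_gain.items():
    print(f"{cpu}: AVX2 column vs SSE4 column = {gain:+.1f}%")
```

The per-CPU difference between the SSE4 and AVX2 columns is the part that relates to the AVX2 bullet above; the stock columns show the overall lead.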

__________________

Le Sagittaire ... ;-) 1- Ateme AVC or x264 2- VP7 or RV10 only for anime 3- XviD, DivX or WMV9 Last edited by Sagittaire; 24th May 2018 at 22:56. |


#24 | Link |

|

Registered User

Join Date: Jun 2017

Posts: 89

|

There is one point from @The Stilt that still might stand:

Efficiency. It seems to me like some in this forum consider speed only. I am not sure about this particular case, because I have neither of these high-end CPUs, but I investigated a lot before I bought my current CPU. If the Intel is slower, but still uses less power over the entire encoding time, it is the more efficient CPU and should be the better choice at the same price - at least for someone who does a lot of encoding. At the end of the day - it would be cheaper to run. Again, I am not saying this is the case here, I simply don't know. I bought a Kaby Lake CPU back in the day and I looked at most of the lineup from G4560 to i7-7700K. Between these CPUs, the most efficient ones were i5-7400 and i5-7500, both non-HT Quads. Neither the cheapest, nor the fastest - but the most efficient, so the cheapest to run. I ended up buying an i5-7500... |
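The efficiency argument comes down to energy per encode, which is average power multiplied by encoding time. A small sketch of that reasoning follows; all wattages, frame rates and the clip length are made-up illustration values, not measurements of any CPU mentioned in this thread.

```python
def energy_wh(avg_power_w, frames, fps):
    """Energy in watt-hours to encode `frames` at `fps` while drawing `avg_power_w`."""
    seconds = frames / fps
    return avg_power_w * seconds / 3600.0


FRAMES = 150_000  # hypothetical clip length in frames

# Hypothetical CPUs: A is faster, B is slower but draws less power.
cpu_a = energy_wh(avg_power_w=200.0, frames=FRAMES, fps=6.0)
cpu_b = energy_wh(avg_power_w=150.0, frames=FRAMES, fps=5.0)

print(f"CPU A: {cpu_a:.0f} Wh per encode")
print(f"CPU B: {cpu_b:.0f} Wh per encode")
print("More efficient:", "A" if cpu_a < cpu_b else "B")
```

With these invented numbers the slower CPU uses less energy per encode, which is the situation described above. Real figures would need power measured at the wall over the whole encode.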


#25 | Link | |

|

RipBot264 author

Join Date: May 2006

Location: Poland

Posts: 7,815

|

Quote:

__________________

Windows 7 Image Updater - SkyLake\KabyLake\CoffeLake\Ryzen Threadripper |


#26 | Link |

|

Registered User

Join Date: May 2009

Posts: 331

|

I'd take both Sagittaire's benchmark and Atak's word over some random's Anandtech/Intel worshiping. Anandtech hasn't been impartial for years and nothing on their "review" site can be trusted. Heck, none of the review sites use x265 properly in their benchmarks.

And let's be clear. The 7900x uses far more power than the 1950x. TDP doesn't mean anything if you can't stick to it. Last edited by RanmaCanada; 26th May 2018 at 04:39. Reason: adding ending |


#27 | Link |

|

Registered User

Join Date: Feb 2002

Location: San Jose, California

Posts: 4,407

|

At 4.0 GHz (max OC) the 1950X uses a lot more power than at stock, just like the 7900X. The 1950X does use a little less power in most situations, but it isn't a major difference, 16 cores vs. 10. Power numbers can move around a lot too, depending on the motherboard (voltages and exact power saving options chosen).

Also, it is hard to saturate 16 cores with 1080p encodes. There are times when 10 faster cores are better. The 1950X is a great CPU for encoding, but it is hardly so black and white; the 7900X is great too. Pure x265 price/performance easily goes to the 1950X, but it is slower in many situations and not that much more efficient.

__________________

madVR options explained |


#28 | Link |

|

RipBot264 author

Join Date: May 2006

Location: Poland

Posts: 7,815

|

If you do some extra filtering in AviSynth (resize from 2160p to 1080p + HDR-to-SDR tone mapping + MDegrain denoising), then saturating all 32 threads on a Threadripper won't be a problem.

Here is the example script I use when encoding UHD HDR to FHD SDR:

Code:

#MT

Import("C:\Users\Dave\Documents\Delphi_Projects\RipBot264\_Compiled\Tools\AviSynth plugins\Scripts\MTmodes.avs")

#PREFETCH_LIMIT=0

#VideoSource

LoadPlugin("C:\Users\Dave\Documents\Delphi_Projects\RipBot264\_Compiled\Tools\AviSynth plugins\ffms\ffms_latest\x64\ffms2.dll")

video=FFVideoSource("E:\_Video_Samples\mkv\Passengers_2016_4K.mkv",cachefile = "C:\Temp\RipBot264temp\job1\Passengers_2016_4K.mkv.ffindex")

#Crop

video=Crop(video,0,278,-0,-278)

#Resize

LoadPlugin("C:\Users\Dave\Documents\Delphi_Projects\RipBot264\_Compiled\Tools\AviSynth plugins\Plugins_JPSDR\Plugins_JPSDR.dll")

video=Spline36ResizeMT(video,1920,800,SetAffinity=false).Sharpen(0.2)

#Tonemap

Loadplugin("C:\Users\Dave\Documents\Delphi_Projects\RipBot264\_Compiled\Tools\AviSynth plugins\avsresize\avsresize.dll")

Loadplugin("C:\Users\Dave\Documents\Delphi_Projects\RipBot264\_Compiled\Tools\AviSynth plugins\DGTonemap\x64\DGTonemap.dll")

video=z_ConvertFormat(video,pixel_type="RGBPS",colorspace_op="2020ncl:st2084:2020:l=>rgb:linear:2020:l", dither_type="none").DGHable

video=z_ConvertFormat(video,pixel_type="YV12",colorspace_op="rgb:linear:2020:l=>709:709:709:l",dither_type="ordered")

#Denoise

Loadplugin("C:\Users\Dave\Documents\Delphi_Projects\RipBot264\_Compiled\Tools\AviSynth plugins\mvtools\mvtools2.dll")

super=MSuper(video,pel=2)

fv1=MAnalyse(super,isb=false,delta=1,overlap=4)

bv1=MAnalyse(super,isb=true,delta=1,overlap=4)

fv2=MAnalyse(super,isb=false,delta=2,overlap=4)

bv2=MAnalyse(super,isb=true,delta=2,overlap=4)

video=MDegrain2(video,super,bv1,fv1,bv2,fv2,thSAD=400)

#Prefetch

video=Prefetch(video,8)

__________________

Windows 7 Image Updater - SkyLake\KabyLake\CoffeLake\Ryzen Threadripper Last edited by Atak_Snajpera; 27th May 2018 at 11:19. |


#29 | Link |

|

Registered User

Join Date: Feb 2002

Location: San Jose, California

Posts: 4,407

|

It is not that I believe you are wrong (I don't have great data here), but what does "crush" mean in terms of actual percentage performance difference? People tend to be way too sure of their CPU choices without a lot of data to back it up.

The 1950X is a great CPU for encoding, and I would absolutely recommend it to anyone building an encoding box on a budget, but I haven't seen any good data that shows it "crushing" the 7900X in an encoding workload (e.g. a 4K x265 encode), or the 7900X using "far more power" when the 1950X is actually matching or outperforming the 7900X. $/performance is not a contest, but raw performance is a lot closer. What kind of FPS numbers do you get with the 1950X, and what does a 7900X get? Any data on power use for both systems?

The limited reviews posted in this thread, and what I have found elsewhere, use different workloads, and their results differ by amounts small and varied enough that it is hard to draw any definitive conclusions from them. We know it is hard to saturate both CPUs, and saturation has a big impact on both performance and power use. I would not be surprised to see some crushing in well-threaded workloads: the 1950X has 60% more cores and each core is not that much slower. I just haven't seen any benchmarks which show it. I suspect this is because reviewers never use encoding options or workflows which properly saturate the 1950X, but this would also cause its power numbers to be lower. Some data would be great.
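As a rough sanity check on the "60% more cores" point, the sketch below compares the core-count ratio with the speed ratio from the "x265" column of the table posted earlier in this thread. The `per_core_factor` name is my own, and the number it produces lumps together clock speed, IPC, AVX2 width and how well each CPU was saturated, so treat it as a ballpark and not a measurement.

```python
# Core counts of the two CPUs being compared.
cores_1950x, cores_7900x = 16, 10
core_ratio = cores_1950x / cores_7900x  # 1.6, i.e. 60% more cores

# "x265" column of the benchmark table posted earlier in the thread.
x265_1950x, x265_7900x = 6.50, 5.47
observed_ratio = x265_1950x / x265_7900x

# How much each 1950X core contributed relative to a 7900X core in that run.
per_core_factor = observed_ratio / core_ratio

print(f"Core-count ratio:       {core_ratio:.2f}x")
print(f"Observed x265 ratio:    {observed_ratio:.2f}x")
print(f"Implied per-core ratio: {per_core_factor:.2f}")
```

If the 1950X were fully saturated and its cores were only slightly slower, the observed ratio would sit close to the core-count ratio; the gap between the two is what better data would need to explain.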

__________________

madVR options explained |


#30 | Link |

|

RipBot264 author

Join Date: May 2006

Location: Poland

Posts: 7,815

|

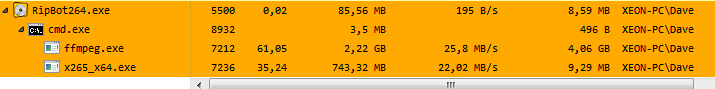

The 7900X shines in x265 thanks to its superior AVX2 unit (2 times wider). However, most filters I use do not use AVX2 at all! This means the CPU will spend more time executing non-AVX code (in most cases plain SSE2 code) than AVX2 code, so the speed advantage on the x265 side will shrink significantly. Just take a look at how many CPU cycles the decoding process (ffmpeg.exe) eats using the script above on my E5-2690 (8C/16T).
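The shrinking advantage can be illustrated with Amdahl's law: only the share of CPU time spent in AVX2 code gets faster. This is a sketch with assumed inputs; the 1.20x AVX2 gain is loosely based on the 15-20% figure quoted earlier in the thread, and the time fractions are hypothetical, not measured from my script.

```python
def overall_speedup(fraction_accelerated, local_speedup):
    """Amdahl's law: total speedup when only part of the run time gets faster."""
    return 1.0 / ((1.0 - fraction_accelerated) + fraction_accelerated / local_speedup)


AVX2_GAIN = 1.20  # assumed speedup of the AVX2-heavy part (x265 itself)

# Hypothetical shares of total CPU time spent in AVX2 code.
for fraction in (1.0, 0.5, 0.25):
    total = overall_speedup(fraction, AVX2_GAIN)
    print(f"{fraction:.0%} of time in AVX2 code -> {total:.3f}x overall")
```

The more CPU time decoding and filtering take, the smaller the fraction, and the closer the overall speedup gets to 1.0x.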

__________________

Windows 7 Image Updater - SkyLake\KabyLake\CoffeLake\Ryzen Threadripper Last edited by Atak_Snajpera; 28th May 2018 at 10:33. |


#31 | Link | |

|

Registered User

Join Date: May 2009

Posts: 331

|

Quote:

But let's face it, until x265 can saturate more than 10? threads, the only real way to test it is to own both, and run multiple instances at the same time :P |


#32 | Link |

|

Registered User

Join Date: Feb 2002

Location: San Jose, California

Posts: 4,407

|

x265 can easily saturate 20 threads on veryslow.

And I don't see any benchmarks from Sagittaire that show a big performance difference, and everything in this thread seems to show the 7900X is slightly faster? What did I miss?

Oh, wait, I think I see: the "x265" column. Maybe I don't understand what the AVX, AVX2, etc. columns are benchmarking.

__________________

madVR options explained Last edited by Asmodian; 30th May 2018 at 20:57. |


#33 | Link |

|

Registered User

Join Date: Aug 2010

Location: Athens, Greece

Posts: 2,901

|

Shock and Awe !

Threadripper 2000 is a 32C/64T absolute monster at 250W TDP, compatible with X399 motherboards and coming in August. It's a nuke on the head of Intel: https://www.anandtech.com/show/12906...w-x399-refresh

__________________

Win 10 x64 (19042.572) - Core i5-2400 - Radeon RX 470 (20.10.1) HEVC decoding benchmarks H.264 DXVA Benchmarks for all |


#34 | Link |

|

Registered User

Join Date: May 2009

Posts: 331

|

Now if the price points are the same as the previous generation, this will destroy Intel's market share and everyone will have a Threadripper :P. I can't wait for this to be released. Hopefully this time we will get some real, proper reviews on workloads, as this goes beyond HEDT and steps into workstation/server territory.


#35 | Link |

|

Registered User

Join Date: Aug 2010

Location: Athens, Greece

Posts: 2,901

|

It seems that Intel will answer that 24C/48T Threadripper with a 22C/44T chip belonging to Skylake HCC (High Core Count). It is a special chip, because HCC tops out at 18C/36T, and it could be used on existing X299 motherboards a month or two after the Threadripper 2 release.

No clocks, TDP or price are available. But for 32C/64T there is no answer. There will be a response at the end of the year or in early 2019 in the form of a new chipset/board and a new CPU (Cascade Lake-X) reaching 28C/56T. But that could be extremely expensive and probably not worth the money, like most Intel CPUs nowadays.

__________________

Win 10 x64 (19042.572) - Core i5-2400 - Radeon RX 470 (20.10.1) HEVC decoding benchmarks H.264 DXVA Benchmarks for all |


#36 | Link |

|

Registered Developer

Join Date: Mar 2010

Location: Hamburg/Germany

Posts: 10,348

|

Intel HCC is a 4x5 grid layout with 2 memory controllers, so 18 CPU cores max. They can't just make a new 22-core chip without re-engineering the die, which would basically be an entirely new CPU line.

__________________

LAV Filters - open source ffmpeg based media splitter and decoders |


#37 | Link |

|

Registered User

Join Date: Feb 2002

Location: San Jose, California

Posts: 4,407

|

It is from their XCC line.

__________________

madVR options explained |


#38 | Link |

|

Registered User

Join Date: Aug 2010

Location: Athens, Greece

Posts: 2,901

|

Guys, I had the same thoughts until I read that:

https://www.techpowerup.com/245090/i...151-processors

__________________

Win 10 x64 (19042.572) - Core i5-2400 - Radeon RX 470 (20.10.1) HEVC decoding benchmarks H.264 DXVA Benchmarks for all |


#39 | Link |

|

Registered Developer

Join Date: Mar 2010

Location: Hamburg/Germany

Posts: 10,348

|

Well, the article says that it's not HCC but a new die. That seems unusual to me; I don't think they would sell enough of those to actually make it worth creating a new in-between die.

__________________

LAV Filters - open source ffmpeg based media splitter and decoders |


#40 | Link |

|

Registered User

Join Date: Aug 2010

Location: Athens, Greece

Posts: 2,901

|

It's a new die. I called it a special case of HCC; Intel should name it differently, but it's definitely not a new CPU or a new CPU architecture.

__________________

Win 10 x64 (19042.572) - Core i5-2400 - Radeon RX 470 (20.10.1) HEVC decoding benchmarks H.264 DXVA Benchmarks for all |
